Protocols for the Agentic Era

How A2A and MCP Are Building the Infrastructure for Interoperable AI Agents

A few weeks ago, I wrote about the architectural challenges of building an interoperable "Internet of Agents" - a stack for autonomous systems that can communicate, collaborate, and coordinate across organizational and technical boundaries. In that post, I described how today's agents are siloed within closed ecosystems, with no shared grammar for task delegation, tool invocation, or context sharing. This makes orchestrating agent workflows a brittle mess of glue code, RPCs, and fragile assumptions.

Now, two protocols are emerging to change that: Google’s Agent2Agent (A2A) and Anthropic’s Model Context Protocol (MCP). They tackle different problems, but together they sketch a blueprint for truly interoperable AI systems.

Let’s unpack what they are, what they’re not, and why you likely need both.

The Interop Gap: Why We Need Agent Protocols

Imagine building a multi-agent system: a calendar agent that delegates to a travel agent, which calls a flight-pricing tool, which updates a CRM.

Sounds simple until you realize:

There’s no shared grammar for delegating tasks.

No standard way to discover agent capabilities.

No secure context-passing or error handling.

Tool APIs are inconsistent and bound to specific runtimes.

This is where A2A and MCP come in. Think of them as agent-native counterparts to HTTP and gRPC: protocols that let agents talk to each other and access tools safely.

Protocol Basics: A Tale of Two Layers

A2A focuses on orchestration. It defines how agents exchange tasks, discover capabilities, and share results.

MCP focuses on augmentation. It standardizes how agents access tools, retrieve context, and execute functions.

Here's what each enables:

A2A: A Protocol for Talking Agents

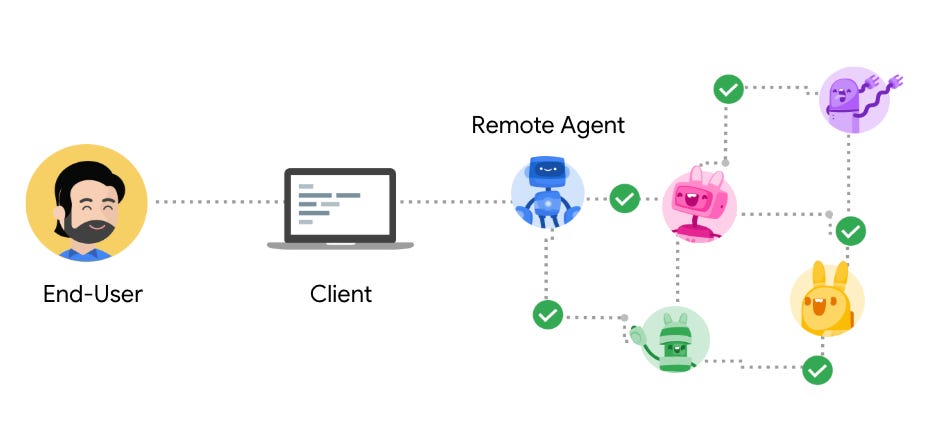

A2A is Google's proposal for a shared interface between AI agents — regardless of framework or vendor. It’s built around the idea of an Agent Card, which publishes what an agent can do, how to reach it, and what formats it accepts.
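To make the Agent Card idea concrete, here's a minimal sketch of what one might look like for a garage agent, plus two helpers a client could use to inspect it. The field names follow the general shape of A2A Agent Cards as I understand them, but the endpoint URL, skill, and helper functions are assumptions for illustration, not a reference implementation.

```python
import json

# Hypothetical Agent Card. Treat the field names as illustrative and the
# values (URL, skill, modes) as made up for this example.
AGENT_CARD = {
    "name": "Auto Repair Agent",
    "description": "Diagnoses and repairs vehicle problems.",
    "url": "https://garage.example.com/a2a",  # assumed endpoint
    "version": "1.0.0",
    "capabilities": {"streaming": True, "pushNotifications": False},
    "defaultInputModes": ["text/plain", "image/jpeg"],
    "defaultOutputModes": ["text/plain"],
    "skills": [
        {
            "id": "diagnose-noise",
            "name": "Diagnose noise",
            "description": "Works out the likely cause of an unusual noise.",
            "tags": ["diagnostics", "engine"],
        }
    ],
}


def accepts(card: dict, mime_type: str) -> bool:
    """Return True if the card lists mime_type among its input modes."""
    return mime_type in card.get("defaultInputModes", [])


def skills_tagged(card: dict, tag: str) -> list[str]:
    """Return the ids of skills that carry the given tag."""
    return [s["id"] for s in card.get("skills", []) if tag in s.get("tags", [])]


# A card is plain JSON, so it can be published and fetched like any document.
serialized = json.dumps(AGENT_CARD, indent=2)
```

A client would fetch a card like this, check that the agent accepts the content it wants to send, and pick a skill before opening a task.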

At the heart of A2A is the Task abstraction:

A task has a lifecycle (submitted, working, input-required, completed, etc.).

It contains messages (conversation turns) and artifacts (results).

Agents can pause, request input, delegate, or stream results.
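The lifecycle above reads naturally as a small state machine. Here's a rough sketch: the state names come from the list above, while the `failed` state, the transition table, and the `Task` class are my own assumptions for illustration rather than anything the protocol prescribes.

```python
from dataclasses import dataclass, field
from enum import Enum


class TaskState(Enum):
    SUBMITTED = "submitted"
    WORKING = "working"
    INPUT_REQUIRED = "input-required"
    COMPLETED = "completed"
    FAILED = "failed"  # assumed: standing in for the "etc." states


# Assumed transition table, for illustration only.
ALLOWED = {
    TaskState.SUBMITTED: {TaskState.WORKING, TaskState.FAILED},
    TaskState.WORKING: {
        TaskState.INPUT_REQUIRED,
        TaskState.COMPLETED,
        TaskState.FAILED,
    },
    TaskState.INPUT_REQUIRED: {TaskState.WORKING, TaskState.FAILED},
    TaskState.COMPLETED: set(),
    TaskState.FAILED: set(),
}


@dataclass
class Task:
    task_id: str
    state: TaskState = TaskState.SUBMITTED
    messages: list = field(default_factory=list)   # conversation turns
    artifacts: list = field(default_factory=list)  # results

    def transition(self, new_state: TaskState) -> None:
        """Move to new_state, rejecting moves the table does not allow."""
        if new_state not in ALLOWED[self.state]:
            raise ValueError(
                f"cannot go from {self.state.value} to {new_state.value}"
            )
        self.state = new_state

    def add_message(self, role: str, text: str) -> None:
        self.messages.append({"role": role, "text": text})


# Walk one task through a pause-for-input round trip.
task = Task("task-1")
task.add_message("user", "My car is rattling.")
task.transition(TaskState.WORKING)
task.transition(TaskState.INPUT_REQUIRED)
task.add_message("agent", "When does the rattle happen?")
task.add_message("user", "On cold starts.")
task.transition(TaskState.WORKING)
task.artifacts.append({"diagnosis": "loose heat shield"})
task.transition(TaskState.COMPLETED)
```

The useful property is that a completed task can't silently go back to working: any client or agent that tries gets an error instead of a confusing state.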

Critically, A2A treats agents as opaque black boxes. It doesn’t assume internal state sharing or tool access. It just handles messaging, coordination, and secure communication across boundaries.

Auto Repair Shop Analogy

An A2A agent is like a mechanic at a garage. You (the client) say, "My car is rattling." The mechanic might ask follow-up questions, consult a supplier, or loop in a colleague — all via structured back-and-forth conversations.

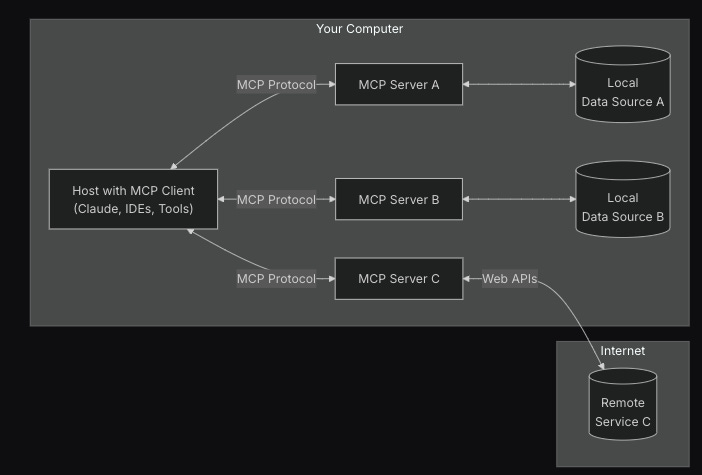

MCP: The Tool Protocol

MCP, by contrast, is about structured integration. It standardizes how agents call tools, access resources, and compose prompts across vendor boundaries.

It defines primitives like:

Tools: Executable functions with structured input/output.

Resources: Data made accessible to the agent.

Prompts: Template-based workflows.

Where A2A handles dialogue, MCP handles action. Tools in MCP are designed to be invoked by LLMs — not just as raw HTTP endpoints, but in a semantically meaningful, predictable way.
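Here's a small sketch of what "structured input/output" means in practice: a tool descriptor with a JSON Schema for its input, and a helper that builds a JSON-RPC `tools/call` request for it. The tool name is borrowed from the garage example in this post; the `subsystem` argument, the schema, and both helper functions are assumptions of mine.

```python
# Hypothetical tool descriptor: a name, a description, and a JSON Schema
# describing the arguments the tool accepts.
DIAGNOSTIC_TOOL = {
    "name": "run_diagnostic_scan",
    "description": "Scan a vehicle subsystem and return fault codes.",
    "inputSchema": {
        "type": "object",
        "properties": {"subsystem": {"type": "string"}},
        "required": ["subsystem"],  # assumed argument name
    },
}


def missing_arguments(tool: dict, arguments: dict) -> list[str]:
    """Return the required argument names that are absent from arguments."""
    required = tool["inputSchema"].get("required", [])
    return [name for name in required if name not in arguments]


def build_tool_call(tool: dict, arguments: dict, request_id: int) -> dict:
    """Build a JSON-RPC 2.0 request that invokes the tool via tools/call."""
    missing = missing_arguments(tool, arguments)
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool["name"], "arguments": arguments},
    }


request = build_tool_call(DIAGNOSTIC_TOOL, {"subsystem": "engine"}, 1)
```

Because the schema travels with the tool, the caller can reject a malformed invocation before it ever reaches the server. A real client would likely use a full JSON Schema validator rather than this required-fields check.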

Note: I’ve covered MCP in detail in a previous post.

Protocol Interplay: When You Need Both

Let’s say your agent receives an A2A task:

“My car is making a rattling noise.”

Using A2A, your agent can:

Ask clarifying questions.

Collaborate with a parts supplier agent.

Using MCP, it can:

Call run_diagnostic_scan(engine) to retrieve fault codes.

Use raise_vehicle_lift(2.0) to inspect the undercarriage.

Think of A2A as the messenger and MCP as the mechanic’s toolbox.

These protocols are complementary, not redundant. A2A helps agents converse; MCP helps them do things.
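The split between conversing and doing can be sketched in a few lines. In this toy handler, the A2A side decides whether to ask a follow-up question or finish the task, and the MCP side is a stand-in function that returns canned data. The argument names (`subsystem`, `height_m`), the sample fault code, and both functions are assumptions for illustration; no real protocol traffic happens here.

```python
def call_tool(name: str, arguments: dict) -> dict:
    """Stand-in for an MCP tool call; returns made-up sample data."""
    if name == "run_diagnostic_scan":
        return {"fault_codes": ["P0300"]}  # sample value, not real output
    if name == "raise_vehicle_lift":
        return {"height_m": arguments["height_m"]}
    raise KeyError(f"unknown tool: {name}")


def handle_task(user_text: str, answers: dict) -> dict:
    """Decide the next step for an incoming A2A task."""
    # A2A side: pause and ask when we don't know enough yet.
    if "when" not in answers:
        return {
            "state": "input-required",
            "question": "When does the rattle happen?",
        }
    # MCP side: use tools to act on what we learned.
    scan = call_tool("run_diagnostic_scan", {"subsystem": "engine"})
    lift = call_tool("raise_vehicle_lift", {"height_m": 2.0})
    return {
        "state": "completed",
        "artifact": {
            "reported": user_text,
            "when": answers["when"],
            "fault_codes": scan["fault_codes"],
            "lift_height_m": lift["height_m"],
        },
    }


complaint = "My car is making a rattling noise."
first = handle_task(complaint, {})
second = handle_task(complaint, {"when": "on cold starts"})
```

The first call comes back as `input-required` with a question for the client; the second, once the answer is supplied, runs both tools and returns a completed task with an artifact.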

Design Pattern: A2A Agents as MCP Resources

Google’s docs propose an elegant integration pattern: model each A2A agent as an MCP resource.

This lets you:

Discover agents via MCP.

Hand off communication to A2A once selected.

It’s like discovering a microservice, then opening a persistent channel to it.
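Here's one way that discover-then-hand-off pattern might look. Each agent appears as an MCP-style resource entry whose contents are its Agent Card, and a lookup function returns the A2A endpoint of the first agent with a matching skill tag. The `agent://` URI scheme, the card contents, and the in-memory stand-ins for listing and reading resources are all assumptions of mine.

```python
import json
from typing import Optional

# Hypothetical resource listing: one entry per A2A agent.
RESOURCE_LIST = [
    {
        "uri": "agent://parts-supplier",  # made-up URI scheme
        "name": "Parts Supplier Agent",
        "mimeType": "application/json",
    },
    {
        "uri": "agent://tyre-shop",
        "name": "Tyre Shop Agent",
        "mimeType": "application/json",
    },
]

# Stand-in for what reading each resource would return: the Agent Card.
RESOURCE_CONTENTS = {
    "agent://parts-supplier": json.dumps({
        "url": "https://parts.example.com/a2a",
        "skills": [{"id": "quote-part", "tags": ["parts", "pricing"]}],
    }),
    "agent://tyre-shop": json.dumps({
        "url": "https://tyres.example.com/a2a",
        "skills": [{"id": "fit-tyres", "tags": ["tyres"]}],
    }),
}


def read_card(uri: str) -> dict:
    """Read a resource and parse it as an Agent Card."""
    return json.loads(RESOURCE_CONTENTS[uri])


def find_agent(tag: str) -> Optional[str]:
    """Return the A2A endpoint of the first agent with a matching skill tag."""
    for resource in RESOURCE_LIST:
        card = read_card(resource["uri"])
        for skill in card.get("skills", []):
            if tag in skill.get("tags", []):
                return card["url"]
    return None


endpoint = find_agent("pricing")
```

Once `find_agent` returns an endpoint, MCP's job is done and everything after that is an A2A conversation with the selected agent.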

Implementation Tips

Keep boundaries clean:

Use A2A for agent communication; MCP for tools.

Align content types:

A2A uses flexible content parts (text, files, data); MCP expects structured schemas.

Design for latency and scale:

Stream results for long-running tasks. Batch MCP calls where you can. Use push notifications for flows that need to survive client disconnects.

Auth mismatch warning:

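A2A declares its authentication schemes in the Agent Card, modeled on OpenAPI security schemes, while MCP's authorization for HTTP transports builds on OAuth 2.1. Align headers and tokens wherever a single request crosses both protocols.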

Observability matters:

Track correlation IDs and span durations across both protocols.
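For the observability tip, here's a minimal sketch of carrying one correlation ID through both layers and timing each step. It's plain Python with no tracing library; the `span` helper, the in-memory `SPANS` list, and the operation names are assumptions for illustration. In a real system you'd more likely hand this to an existing tracing stack.

```python
import time
import uuid
from contextlib import contextmanager

SPANS: list[dict] = []  # in-memory sink, standing in for a tracing backend


@contextmanager
def span(correlation_id: str, protocol: str, operation: str):
    """Record how long the wrapped block takes, tagged with the shared ID."""
    start = time.perf_counter()
    try:
        yield
    finally:
        SPANS.append({
            "correlation_id": correlation_id,
            "protocol": protocol,
            "operation": operation,
            "duration_s": time.perf_counter() - start,
        })


# One ID is minted when the A2A task arrives and reused for every MCP call.
correlation_id = str(uuid.uuid4())
with span(correlation_id, "a2a", "handle_task"):
    with span(correlation_id, "mcp", "run_diagnostic_scan"):
        time.sleep(0.01)  # pretend the tool is doing work
```

The inner span finishes first, so it's recorded first; filtering `SPANS` by `correlation_id` then gives you the full picture of one task across both protocols.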

Looking Ahead

These protocols are early — but promising. Expect:

Agent marketplaces using A2A.

Tool registries powered by MCP.

Mixed-initiative workflows blending humans, agents, and tools.

The protocols are quietly doing what TCP/IP did for networks — abstracting away the painful glue, and making systems composable.

The future of AI isn't monolithic - it's modular, composable, and collaborative. By implementing both A2A and MCP, you can build systems that:

Talk across organizational boundaries

Leverage specialized capabilities from multiple vendors

Provide secure, focused access to tools and data

Create seamless experiences for users

Protocols might seem like dry technical details, but they're the foundation of ecosystems. Just as HTTP enabled the web, A2A and MCP will enable the next generation of AI systems - working together rather than competing.

What agent-to-agent interactions are you most excited to build? How might these complementary protocols change your approach to AI system design?