What Is an AI Agent, Really?

If you ask five AI engineers to define "AI agent," you’ll likely get five different answers. AI agents are evolving so rapidly that even top tech companies define them in different ways. Some focus on reasoning and autonomy, while others emphasize tool usage and orchestration. So how can AI engineers cut through the confusion and design agents that actually work?

Anthropic

“Workflows are systems where LLMs and tools are orchestrated through predefined code paths.

Agents, on the other hand, are systems where LLMs dynamically direct their own processes and tool usage, maintaining control over how they accomplish tasks.” 🔗

Microsoft

“An agent takes the power of generative AI a step further, because instead of just assisting you, agents can work alongside you or even on your behalf.” 🔗

Meta

“At its core, an agent follows a sophisticated execution loop that enables multi-step reasoning, tool usage, and safety checks.” 🔗

Google

“AI agents are software systems that use AI to pursue goals and complete tasks on behalf of users. They show reasoning, planning, and memory and have a level of autonomy to make decisions, learn, and adapt. Key features of an AI Agent - Reasoning, Acting, Observing, Planning, Collaborating, Self-refining” 🔗

AWS

“An agent helps your end-users complete actions based on organization data and user input. Agents orchestrate interactions between foundation models (FMs), data sources, software applications, and user conversations. In addition, agents automatically call APIs to take actions and invoke knowledge bases to supplement information for these actions.” 🔗

IBM

“Agentic AI is a type of AI that can determine and carry out a course of action by itself. Most agents available at the time of publishing are LLMs with function-calling capabilities, meaning that they can call tools to perform tasks.” 🔗

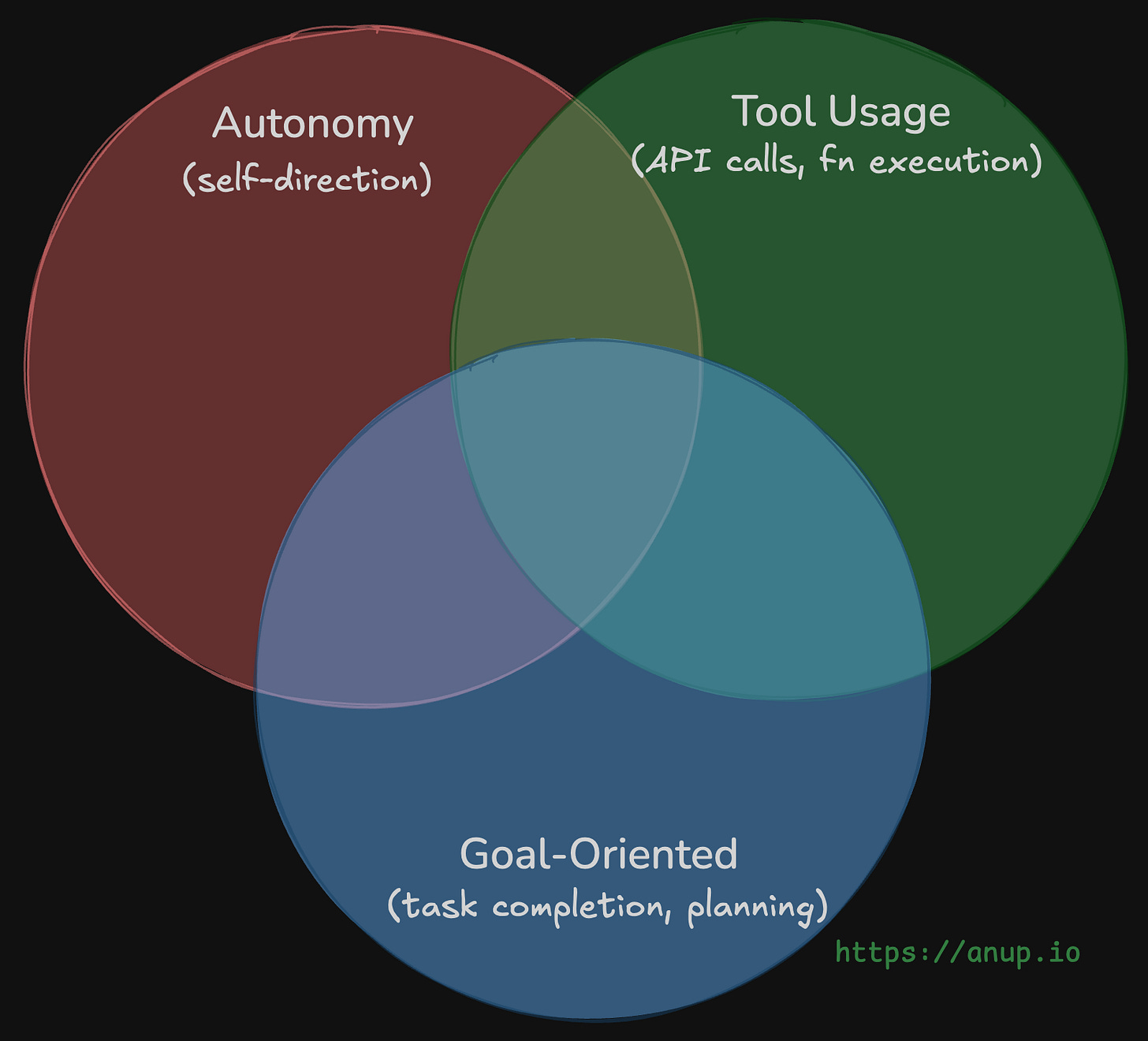

With this many definitions in circulation, engineers need a structured approach to agent design. Some definitions emphasize autonomy, while others focus on orchestration or tool usage. Without a shared blueprint, anyone designing an agent is aiming at a moving target.

The Need for a Framework

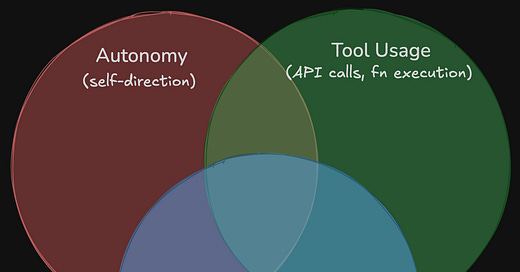

While these definitions vary, some key themes emerge, such as autonomy, tool usage, memory, and reasoning. However, the level of autonomy and the emphasis on reasoning vs. orchestration differ significantly across organisations. This variation creates challenges for AI engineers trying to design robust AI agents.

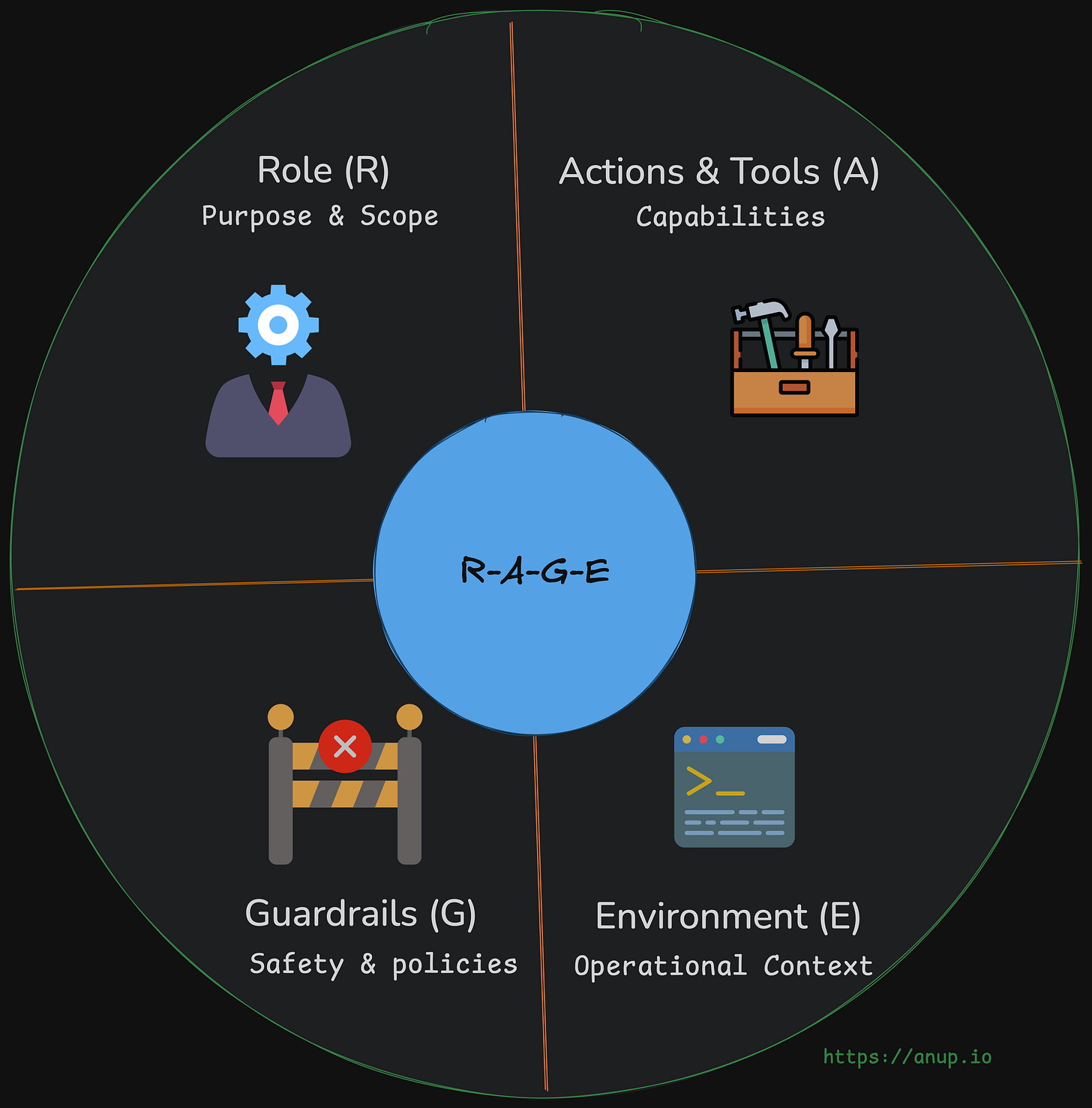

This is where the R-A-G-E Framework comes in. Rather than getting lost in competing definitions, this framework provides a structured way to design AI agents by focusing on four core attributes: Role (R), Actions/Tools (A), Guardrails (G), and Environment (E)—R-A-G-E.

The R-A-G-E Framework for Architecting AI Agents

If you're designing an AI agent, define each of the four attributes explicitly: Role, Actions/Tools, Guardrails, and Environment. The sections below take them one at a time.

Role (R) – Defining the Agent’s Purpose and Scope

An AI agent should have a clear and specific purpose—but how broad or narrow should it be? Think of it like writing a job description for your AI. Ask yourself:

What is the agent’s primary role? (e.g., customer support, data analysis, workflow automation)

What are its key tasks? (e.g., sorting documents, responding to customer inquiries, generating insights)

What is the intended outcome? (e.g., reducing response time by 50%, improving efficiency)

How much autonomy should it have? (Fully autonomous vs. human-approved decisions)

Tip: If the agent's role is too broad, it will lack focus. If it's too narrow, it may not be useful.

When defining an AI agent’s role, engineers should pin down its scope and goals. A few guiding questions sharpen the definition:

Core Capabilities: What tasks should it perform? For example, will the agent sort documents, analyse customer interactions, respond to inquiries, generate data insights, or do something else?

Intended Outcomes: What is the end goal? Are you trying to boost efficiency, improve customer service quality, increase sales, or automate tedious processes? Having a concrete objective (e.g., reduce average customer support response time by 50%) gives the agent a clear mission.

Necessary Data: What information will it use? Determine if the agent needs access to databases, emails, sensor data, or third-party APIs. For instance, a financial advisor agent might pull stock market data, while a support chatbot taps into a knowledge base.

Level of Autonomy: How much decision-making freedom does it have? Can it take actions on its own, or does it need human approval for critical steps? You might allow a scheduling assistant agent to automatically book meetings, but require a medical diagnosis agent to get a doctor’s sign-off before final decisions. Whichever way you go, make the choice explicit: decide up front whether the agent acts independently or under human supervision.
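One way to make these questions concrete is to write the role down as a small data structure. The sketch below is only an illustration: the `AgentRole` and `Autonomy` names, the three autonomy levels, and the "more than five capabilities is too broad" cut-off are all assumptions made for this example, not part of any library.

```python
from dataclasses import dataclass
from enum import Enum


class Autonomy(Enum):
    """How much the agent may decide without a human (hypothetical levels)."""
    HUMAN_APPROVAL = "human_approval"  # a person signs off on every decision
    SUPERVISED = "supervised"          # acts alone, humans review critical steps
    FULL = "full"                      # acts alone end to end


@dataclass(frozen=True)
class AgentRole:
    """A 'job description' for an agent, mirroring the guiding questions."""
    name: str
    core_capabilities: tuple[str, ...]
    intended_outcome: str
    data_sources: tuple[str, ...]
    autonomy: Autonomy

    def problems(self) -> list[str]:
        """Return gaps found in the role definition (empty list means none)."""
        issues = []
        if not self.core_capabilities:
            issues.append("no core capabilities listed")
        if len(self.core_capabilities) > 5:  # arbitrary, illustrative cut-off
            issues.append("role may be too broad")
        if not self.intended_outcome.strip():
            issues.append("no intended outcome")
        return issues


support = AgentRole(
    name="support-chatbot",
    core_capabilities=("respond to customer inquiries",),
    intended_outcome="reduce average support response time by 50%",
    data_sources=("knowledge base",),
    autonomy=Autonomy.SUPERVISED,
)
print(support.problems())  # []
```

Writing the role as data rather than prose means a vague or overloaded definition can be flagged before any model is wired in.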

After defining the agent’s role, the next step is to equip it with the right tools and set limits on its autonomy.

Actions & Tools (A) – Equipping the Agent with the Right Capabilities

Actions & Tools cover how the agent accomplishes tasks and what resources it can draw on. Think of this as equipping the agent with the right "skills and toolbox" and defining its autonomy in using them.

Defining Actions

List the actions the agent is allowed or expected to take, ranging from informational (e.g., drafting a report) to transactional or physical (e.g., executing a trade, adjusting flight paths). Clearly delineating these actions prevents the agent from exceeding its intended function.

Selecting Tools & Integrations

Tools enable agents to execute tasks. For chatbots, tools might include APIs for customer data, FAQs, or translation services. For autonomous agents like drones, they could include sensors and control APIs. Effective agents must not only have access to tools but also know when and how to use them. Patterns such as ReAct (Reason + Act) interleave reasoning steps with tool calls, so the agent picks its next tool step by step based on what it has observed so far.
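To show what step-by-step tool selection can look like, here is a minimal sketch of a reason-act-observe loop in the spirit of ReAct. It is not the ReAct implementation: the tools `lookup_faq` and `translate` are made up for this example, and `choose_step` is a hard-coded stand-in for the call a real agent would make to an LLM.

```python
from typing import Callable


# Hypothetical tools; real ones would wrap APIs for customer data, FAQs, etc.
def lookup_faq(query: str) -> str:
    faqs = {"refund": "Refunds are issued within 14 days."}
    return faqs.get(query, "no entry")


def translate(text: str) -> str:
    return f"[translated] {text}"


TOOLS: dict[str, Callable[[str], str]] = {
    "lookup_faq": lookup_faq,
    "translate": translate,
}


def choose_step(goal: str, observations: list[str]) -> tuple[str, str]:
    """Stand-in for the model's reasoning step: returns (tool_name, tool_input).

    A real agent would ask an LLM here; this stub hard-codes the decision.
    """
    if not observations:
        return "lookup_faq", goal
    if not observations[-1].startswith("[translated]"):
        return "translate", observations[-1]
    return "finish", observations[-1]


def run_agent(goal: str, max_steps: int = 5) -> str:
    observations: list[str] = []
    for _ in range(max_steps):
        tool_name, tool_input = choose_step(goal, observations)  # reason
        if tool_name == "finish":
            return tool_input
        if tool_name not in TOOLS:  # refuse anything outside the registry
            raise ValueError(f"unknown tool: {tool_name}")
        observations.append(TOOLS[tool_name](tool_input))  # act, then observe
    raise RuntimeError("step budget exhausted")


print(run_agent("refund"))  # [translated] Refunds are issued within 14 days.
```

Two design choices carry over to real systems: the agent can only call tools that appear in the registry, and the loop has a step budget so it cannot run forever.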

Setting Constraints & Autonomy

Giving an agent tools raises the question of decision-making authority—how autonomously can it act? Constraints must be set based on risk levels. A marketing AI may autonomously send reports but require approval for customer-wide messages. A trading agent might execute small trades but need human oversight for larger transactions.
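A risk-based constraint like the trading example can be sketched as a simple gate in front of the action. The `TradeRequest` type and the 1,000 USD limit below are assumptions chosen for illustration; a real limit would come from your own risk policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TradeRequest:
    symbol: str
    notional_usd: float


# Hypothetical limit: trades at or below this size run without a human.
AUTO_APPROVE_LIMIT_USD = 1_000.0


def decide(request: TradeRequest) -> str:
    """Return 'execute' for small trades, 'needs_approval' for larger ones."""
    if request.notional_usd <= 0:
        return "reject"  # malformed request, never executed
    if request.notional_usd <= AUTO_APPROVE_LIMIT_USD:
        return "execute"
    return "needs_approval"


print(decide(TradeRequest("ABC", 250.0)))     # execute
print(decide(TradeRequest("ABC", 50_000.0)))  # needs_approval
```

Keeping the gate outside the model means the limit holds even when the agent's reasoning goes wrong.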

Ensuring Safe & Responsible Use

Agents must apply tools correctly within defined boundaries. Safety measures, like sandboxing code execution or limiting physical movements, prevent unintended consequences. A key question: What’s the worst that could happen if the agent misused this tool? Constraints should mitigate these risks.
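As a small illustration of limiting physical movements, the sketch below caps the length of any single move command before it reaches the hardware. The function name `bounded_move` and the 2-metre limit are assumptions for this example only.

```python
import math

MAX_STEP_METRES = 2.0  # hypothetical per-command movement limit


def bounded_move(dx: float, dy: float) -> tuple[float, float]:
    """Scale a requested move so its length never exceeds MAX_STEP_METRES."""
    if not (math.isfinite(dx) and math.isfinite(dy)):
        raise ValueError("movement must be finite")
    length = math.hypot(dx, dy)
    if length <= MAX_STEP_METRES:
        return dx, dy  # already within bounds, pass through unchanged
    scale = MAX_STEP_METRES / length
    return dx * scale, dy * scale  # same direction, capped distance
```

Here the worst case for a misused tool is a 2-metre move in the wrong direction rather than an unbounded one, which is the kind of answer the "worst that could happen" question is looking for.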

With actions and tools in place, the next step is Guardrails—the policies and safety measures that keep the agent on track.

Guardrails (G) – Ensuring Ethical and Safe Operation

Even the most capable AI agents need guardrails that keep them safe, ethical, and aligned with intended behaviour by preventing errors, misuse, and unintended consequences.

Key Guardrails:

Ethical & Policy Constraints – Encode values and standards, such as preventing biased language, restricting sensitive advice (e.g., legal, medical), or ensuring fair customer interactions.

Security & Safety Measures – Include input validation, authentication, and fail-safes like "kill switches" to halt abnormal behaviour. For high-risk applications, a human in the loop adds a further safeguard.

Regulatory Compliance – Supports adherence to laws (e.g., GDPR, HIPAA), with safeguards like encryption, access controls, and logging to reduce the risk of legal violations.

Content Filtering & Accuracy Checks – Use filters, validation steps, and monitoring to prevent inappropriate, misleading, or harmful outputs (e.g., fact-checking reports or blocking toxic content).
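Several of these guardrails can be layered around the model call. The following is a deliberately simple sketch: the keyword list, the input limit, the email pattern, and the `generate` callable (standing in for the model) are all assumptions for illustration, and a production filter would need far more than keyword matching.

```python
import re

BLOCKED_TOPICS = ("diagnosis", "lawsuit")  # hypothetical sensitive-advice keywords
MAX_INPUT_CHARS = 2_000                    # hypothetical input size limit
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


class KillSwitch:
    """Fail-safe: once tripped, the agent refuses all further work."""

    def __init__(self) -> None:
        self.tripped = False

    def trip(self) -> None:
        self.tripped = True


def check_input(text: str) -> None:
    """Input validation: reject empty or oversized requests."""
    if not text.strip() or len(text) > MAX_INPUT_CHARS:
        raise ValueError("invalid input")


def filter_output(text: str) -> str:
    """Content filter: block sensitive topics and redact email addresses."""
    if any(topic in text.lower() for topic in BLOCKED_TOPICS):
        return "I can't advise on that; please consult a qualified professional."
    return EMAIL.sub("[redacted]", text)


def guarded_reply(user_text: str, generate, switch: KillSwitch) -> str:
    """Run the model behind the kill switch, input check, and output filter."""
    if switch.tripped:
        return "Agent halted."
    check_input(user_text)
    return filter_output(generate(user_text))
```

Each guardrail is a separate function, so it can be tested and tightened on its own as monitoring reveals new failure cases.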

Guardrails require continuous testing and monitoring to adapt to real-world challenges. But even the most well-guarded AI agent is only as effective as the environment it operates in. An agent built for a predictable setting, like customer service chat, will struggle if placed in a dynamic environment, such as financial trading or autonomous robotics. That’s why understanding the agent’s operational environment is the final key to success.

Environment (E) – Designing for the Real World (or Wherever Your Agent Lives)

An AI agent’s environment—physical, digital, or hybrid—shapes its design, constraints, and interactions.

Key Considerations:

Physical vs. Digital – A factory robot faces sensor noise and safety risks, while a cloud-based agent deals with network reliability and data loads. Hybrid systems must handle connectivity issues.

Observability & Uncertainty – Can the agent fully perceive its environment, or does it operate with incomplete data? Stochastic environments (e.g., stock markets) require adaptability, while deterministic ones (e.g., chess) are predictable.

Technical Infrastructure – Deployment varies from constrained devices to cloud clusters. Factors like latency, scalability, compliance, and integration with existing systems must be addressed.

Other Actors – Agents often interact with humans or other AI systems. Multi-agent coordination, hand-off protocols, and conflict resolution mechanisms must be well-defined.

Real-World Constraints – Agents may require real-time decision-making (e.g., autonomous vehicles), redundancy for 24/7 uptime, or scalability to handle increasing demands. Testing in sandbox environments before full deployment mitigates risks.
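The considerations above can be turned into a checklist that is derived from a description of the environment. This is a sketch under stated assumptions: the `Environment` fields and the wording of each requirement are invented for this example and simply restate the points in the list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Environment:
    kind: str               # "physical", "digital", or "hybrid"
    fully_observable: bool  # can the agent perceive everything it needs?
    deterministic: bool     # do the same actions always give the same result?
    real_time: bool         # must decisions meet a deadline?
    other_actors: bool      # are humans or other agents involved?


def design_requirements(env: Environment) -> list[str]:
    """Map environment traits to the design concerns they imply."""
    if env.kind not in ("physical", "digital", "hybrid"):
        raise ValueError(f"unknown environment kind: {env.kind}")
    reqs = []
    if env.kind in ("physical", "hybrid"):
        reqs.append("handle sensor noise and physical safety limits")
    if env.kind in ("digital", "hybrid"):
        reqs.append("handle network failures and data load")
    if not env.fully_observable:
        reqs.append("act under incomplete information")
    if not env.deterministic:
        reqs.append("adapt to stochastic outcomes")
    if env.real_time:
        reqs.append("meet a latency budget")
    if env.other_actors:
        reqs.append("define hand-off and conflict-resolution protocols")
    reqs.append("test in a sandbox before full deployment")
    return reqs


trading = Environment("digital", fully_observable=False, deterministic=False,
                      real_time=True, other_actors=True)
for requirement in design_requirements(trading):
    print("-", requirement)
```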

Understanding the environment helps agents function effectively and safely in their intended context.

Building AI agents requires holistic design. Agents that incorporate all four R-A-G-E components are more likely to be effective, safe, and reliable.

If you’re building an AI agent today, ask yourself:

✅ What Role does my agent serve?

✅ What Actions & Tools does it need?

✅ What Guardrails must be in place?

✅ What Environment does it operate in?

AI agents are rapidly evolving, and their definitions will continue to shift. But by applying the R-A-G-E framework, you can build agents that are effective, safe, and scalable.

Now, I want to hear from you! How do you define AI agents? Does the R-A-G-E framework align with your approach? Let’s discuss in the comments! 🚀