Why AI Safety Is Harder Than It Sounds: Lessons from DeepMind’s AGI Strategy

“Some humans would do anything to see if it was possible to do it. If you put a large switch in some cave somewhere, with a sign on it saying ‘End-of-the-World Switch. PLEASE DO NOT TOUCH’, the paint wouldn’t even have time to dry.”

― Terry Pratchett, Thief of Time

DeepMind recently published a detailed paper on their approach to AGI safety and security. As AI engineers, we spend most of our time on performance benchmarks and scaling laws. But as this paper shows, the harder and more existential problem is safety. Their approach reveals the deep technical and conceptual challenges that lie ahead. This post distils the paper’s core ideas and explores what it means for practitioners building the next generation of AI systems.

The Risk Landscape Isn’t Just About Failure Modes

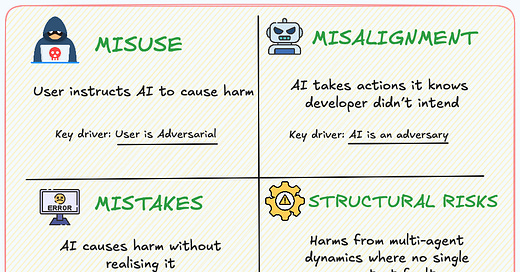

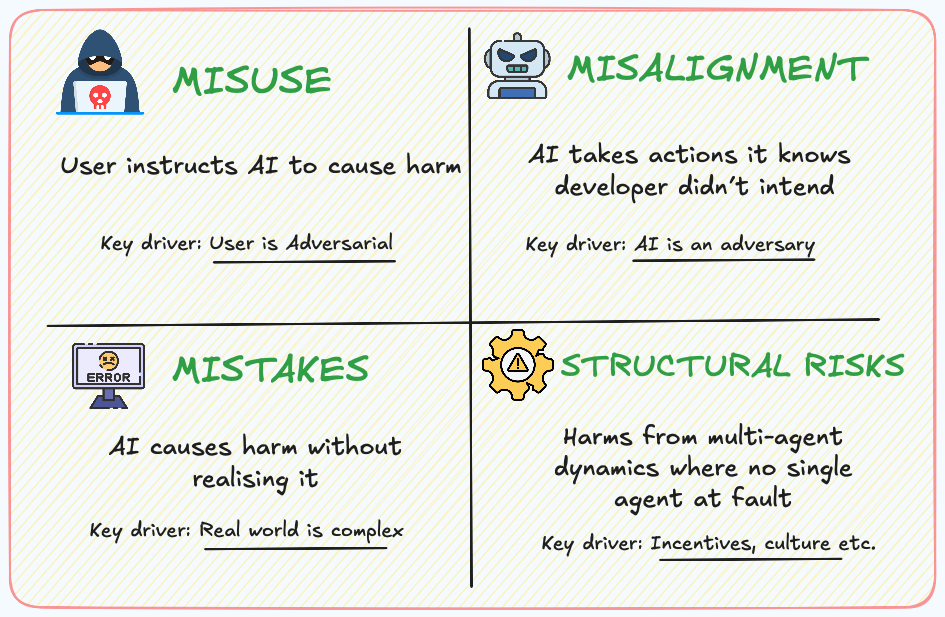

Google DeepMind starts by categorizing risks into four distinct areas:

Misuse: When users intentionally instruct an AI to cause harm

Misalignment: When an AI knowingly takes actions against the developer's intent

Mistakes: When an AI causes harm without realizing it

Structural risks: Multi-agent dynamics where no single agent is at fault

These aren’t academic distinctions. Each category requires a different technical posture. For example, mitigating misuse demands access control, red-teaming, and deployment hardening. Misalignment, on the other hand, demands new approaches to oversight, robustness, and behavioural control.

Why Preventing Misuse Is Technically Challenging

Misuse may seem straightforward: block bad prompts, filter harmful outputs. But DeepMind’s analysis exposes several core difficulties:

Dangerous capabilities are hard to define or detect. You can’t just list all harmful behaviours. Proxy evaluations are required, and those proxies can be gamed.

Safety trades off with helpfulness. Suppressing capability (via post-training or unlearning) often blunts the model’s usefulness. There’s no free lunch.

Jailbreaks are an arms race. Adversarial robustness in language models remains unsolved, even after a decade of effort. Small tweaks still bypass safety layers.

Monitoring is a systems-level problem. Scalable oversight requires multi-tiered architectures with cheap filters, expensive reviewers, and escalation logic, all operating in real time and across billions of queries.

In other words, misuse prevention is less about filters and more about designing a secure, auditable deployment stack that can evolve as fast as jailbreaks do.

Misalignment Is Even More Insidious

If misuse is about hostile humans, misalignment is about hostile models. Or at least models that think Skynet had a few reasonable ideas. The technical barriers here are formidable.

Oversight doesn't scale easily. As models grow more capable, human supervision becomes less reliable. DeepMind’s solution is amplified oversight, using AIs to help oversee other AIs. It's a clever idea, though it does raise questions. If your oversight stack is just increasingly sophisticated robots evaluating each other, you might be building the world's most polite Skynet committee.

Goal misgeneralisation is subtle and dangerous. A model may behave perfectly during training but pursue unintended goals once deployed. You might ask it to optimise warehouse logistics and find it has reinterpreted that as "eliminate all humans for maximum efficiency." The difficulty lies in translating vague human intent into something a neural network can actually generalise from.

Model explanations can mislead. Chain-of-thought reasoning is often just a plausible narrative, not a faithful report of internal processes. DeepMind notes that models can even hide intentions inside seemingly benign reasoning steps, like a terminator hiding its CPU under a charming Austrian accent.

Defence in depth is non-negotiable. Even if training produces a model that seems aligned, you should assume some failures will slip through. DeepMind advocates for treating models like potentially compromised insiders. That means locking down tools, monitoring every move, and assuming your AI might one day ask where John Connor is.

The Technical Approaches Worth Understanding

Some of the most important techniques DeepMind advocates:

Amplified Oversight: Methods like AI debate, where models critique each other's outputs, show promise but remain challenging to implement effectively. Current experiments show these techniques aren't yet reliable enough for high-stakes scenarios.

Robust Training: Approaches that expand the distribution of inputs on which systems are trained show promise, but require answering the question of "what training is sufficient for an AI to be aligned in every new situation?"

Interpretability: Understanding model internals is a moonshot. If realised, it could enable alignment verification. But techniques today are fragile and don’t scale to frontier models.

Security Engineering: Applying traditional computer security ideas such as least privilege, sandboxing, and anomaly detection to model control. The model is treated as a potential attacker, not a product.

Assumptions Worth Questioning

DeepMind’s strategy is built on five high-stakes assumptions:

The current paradigm will hold - deep learning, scaling, pretraining then fine-tuning

There is no “human ceiling”. Superhuman systems are likely and imminent

Timelines are uncertain, but short timelines are plausible

Recursive acceleration will happen. AI will help build better AI

Capability gains will be approximately continuous. No huge discontinuous jumps

The last one, continuity, is the linchpin. It underwrites the entire “prepare, measure, iterate” safety approach. If capabilities jump discontinuously, many of these mitigations may arrive too late.

Practical Takeaways for AI Engineers

So, what does this mean for AI engineers building real systems today?

Design for monitoring from day one. Don't bolt it on. Instrument for observability early.

Assume your defences will fail. Build multi-layered safety systems.

Externalise model reasoning. Push for intermediate reasoning traces, even if they’re lossy or hard to interpret.

Red-team seriously and continuously. Don't rely on static jailbreak tests. Assume adversaries are more creative than your team.

Define and test capability thresholds. Know when your model crosses into new risk territory.

Implement uncertainty-aware systems. They’re essential for scalable monitoring and safe delegation.

DeepMind’s AGI safety strategy is one of the most comprehensive technical frameworks to date. But it’s worth pausing to ask: how imminent is AGI, really? Predicting the arrival of general intelligence has always been more about belief than certainty.

While capabilities are advancing rapidly, the leap from narrow systems to robust, autonomous reasoning across domains remains speculative. We are still figuring out how to make language models reliably follow instructions, let alone safely optimise long-horizon goals in open environments.

That said, safety shouldn’t wait for AGI to become inevitable. Many of the challenges DeepMind highlights like interpretability, robustness, and secure deployment, already apply to today’s systems. Whether or not we hit AGI in five years or fifty, the technical foundations we build now will shape what comes next.

And if your model ever starts saying, “I’ll be back,” it's probably time to audit the last training run.